Images are to object detection as videos are to...

If you thought about object tracking, you guessed correctly. On a fundamental level, an image is a visual snapshot, and a video is a sequence of images, so what makes object tracking different from object detection?

This article discusses the underlying concept behind object tracking and what differentiates it from object detection. Then, it highlights the challenges associated with implementing object tracking and how to overcome them.

How does object tracking work?

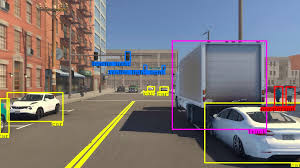

Object tracking uses computer vision algorithms to monitor the location and motion of objects across the frames of a video. Two concepts set it apart from object detection: object labeling and motion tracking.

- Object labeling: In object tracking, each detected object in the frame is given a unique label, and its location coordinates are stored.

- Motion tracking: The stored location coordinates are then used to estimate the object's future location and thus track it.

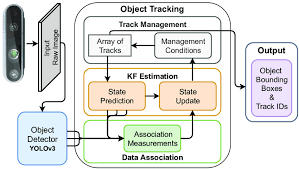

Understanding the object tracking workflow is key to seeing how tracking can be applied to common problems. Workflows vary between models and use cases, but a typical object-tracking pipeline looks like this:

1. Object Detection

This is the foundation of object tracking: objects of interest are identified in each frame. Detection can use traditional methods such as background subtraction and frame differencing, or deep learning-based detectors such as YOLO and the R-CNN family, which are built on convolutional neural networks (CNNs).
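As a rough illustration of the traditional route, here is a minimal frame-differencing detector in Python with NumPy. It assumes grayscale frames stored as 2-D uint8 arrays, a single moving object, and an illustrative threshold; the function name is hypothetical, and a real pipeline would add noise filtering and connected-component labeling to separate multiple objects.

```python
import numpy as np

def detect_by_frame_differencing(prev_frame, frame, threshold=25):
    """Return a bounding box (x_min, y_min, x_max, y_max) around pixels that
    changed between two grayscale frames, or None if nothing moved.

    Assumes both frames are 2-D uint8 arrays of the same shape; the threshold
    is an illustrative value that would need tuning for real footage.
    """
    # Cast to a signed type so the subtraction cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    mask = diff > threshold          # pixels that changed noticeably
    if not mask.any():
        return None                  # no motion detected
    ys, xs = np.nonzero(mask)
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
```

Because only changed pixels are kept, the returned box covers the union of the object's previous and current positions; background subtraction against a learned background model avoids that effect.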

2. Feature Extraction

Once objects are detected, the next step is to extract features from the objects that can help in tracking. Features could include:

- Appearance features: Colour, texture, shape, etc.

- Motion features: Velocity, direction, etc.

- Deep features: Features extracted from intermediate layers of deep neural networks.
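To make appearance features concrete, the sketch below computes a normalized color histogram for an object's image patch and compares two patches with histogram intersection. The bin count and function names are assumptions for illustration; with deep features, the histogram would be replaced by a network embedding.

```python
import numpy as np

def color_histogram(patch, bins=8):
    """Appearance feature: a normalized joint RGB histogram of an image patch.

    `patch` is assumed to be an H x W x 3 uint8 array cropped from the
    object's bounding box; `bins` per channel is an illustrative choice.
    """
    # Quantize each channel into `bins` levels, then count joint occurrences.
    q = (patch.reshape(-1, 3).astype(np.int64) * bins) // 256
    idx = (q[:, 0] * bins + q[:, 1]) * bins + q[:, 2]
    hist = np.bincount(idx, minlength=bins ** 3).astype(np.float64)
    return hist / hist.sum()

def histogram_similarity(h1, h2):
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint ones."""
    return float(np.minimum(h1, h2).sum())
```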

3. Object Association

This step involves linking the detected objects across frames. Techniques used for object association include:

- Data association algorithms: These link detections across frames by matching objects in successive frames based on their predicted locations and appearance features, which keeps each object's identity consistent over time. Examples include the Hungarian algorithm, Global Nearest Neighbor, and Joint Probabilistic Data Association.

- Kalman filters: They are used to predict an object's future location based on its previous states and measurements.

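- Particle filters: They represent an object's possible states as a set of weighted samples, giving a probabilistic framework that copes with nonlinear motion and can be extended to tracking multiple objects.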

- Deep learning approaches: Recurrent neural networks (RNNs), LSTM (Long Short-Term Memory) networks, or graph neural networks (GNNs) are used for temporal association of objects.
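The following sketch shows Hungarian-algorithm data association on IoU (intersection over union) between predicted track boxes and new detections, using SciPy's `linear_sum_assignment`. The `min_iou` threshold and the helper names are illustrative assumptions, not the settings of any specific tracker.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def associate(predicted, detections, min_iou=0.3):
    """Match predicted track boxes to detections with the Hungarian algorithm.

    Returns (matches, unmatched_tracks, unmatched_detections) as index lists;
    min_iou is an illustrative gating threshold.
    """
    if len(predicted) == 0 or len(detections) == 0:
        return [], list(range(len(predicted))), list(range(len(detections)))
    # Cost = 1 - IoU, so minimizing total cost maximizes total overlap.
    cost = np.array([[1.0 - iou(p, d) for d in detections] for p in predicted])
    rows, cols = linear_sum_assignment(cost)
    # Reject assigned pairs whose overlap is too small to be the same object.
    matches = [(int(r), int(c)) for r, c in zip(rows, cols)
               if 1.0 - cost[r, c] >= min_iou]
    matched_t = {r for r, _ in matches}
    matched_d = {c for _, c in matches}
    unmatched_t = [i for i in range(len(predicted)) if i not in matched_t]
    unmatched_d = [j for j in range(len(detections)) if j not in matched_d]
    return matches, unmatched_t, unmatched_d
```

Unmatched detections would typically start new tracks, while unmatched tracks are kept alive on their predictions for a few frames.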

4. Tracking Algorithms

These are the core algorithms of object tracking, used to follow an identified object's motion across frames. Popular tracking algorithms include:

- Single Object Tracking (SOT): This focuses on tracking a single object. Examples include CSRT (Discriminative Correlation Filter with Channel and Spatial Reliability), KCF (Kernelized Correlation Filter), and MOSSE (Minimum Output Sum of Squared Error) trackers.

- Multiple Object Tracking (MOT): This focuses on tracking multiple objects simultaneously. Techniques include:

  - SORT (Simple Online and Realtime Tracking): It combines object detection with Kalman filtering for motion prediction and the Hungarian algorithm for data association.

  - DeepSORT: It extends SORT with appearance descriptors.

  - Tracktor: It uses object detectors for both detection and tracking.
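Since SORT relies on Kalman filtering, here is a minimal constant-velocity Kalman filter for a 2-D point. This is a simplified sketch: SORT's actual state also includes box scale and aspect ratio, and the noise parameters `q` and `r` below are assumptions chosen for illustration.

```python
import numpy as np

class ConstantVelocityKalman:
    """Minimal Kalman filter for a 2-D point with state [x, y, vx, vy].

    A simplified stand-in for the motion model of SORT-style trackers;
    q (process noise) and r (measurement noise) are illustrative values.
    """

    def __init__(self, x, y, dt=1.0, q=1e-2, r=1.0):
        self.s = np.array([x, y, 0.0, 0.0], dtype=float)  # state estimate
        self.P = np.eye(4) * 10.0                         # state covariance (uncertain start)
        self.F = np.array([[1, 0, dt, 0],                 # constant-velocity motion model
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],                  # only position is observed
                           [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * q
        self.R = np.eye(2) * r

    def predict(self):
        """Project the state one frame ahead and return the predicted position."""
        self.s = self.F @ self.s
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.s[:2]

    def update(self, z):
        """Correct the state with a measured position z = (x, y)."""
        y = np.asarray(z, dtype=float) - self.H @ self.s   # innovation
        S = self.H @ self.P @ self.H.T + self.R            # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)           # Kalman gain
        self.s = self.s + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.s[:2]
```

Each frame, `predict()` gives the expected position used for data association, and `update()` folds in the matched detection.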

5. Handling Occlusions and Re-Identification

As an object moves through a sequence of frames, it can be blocked, obscured, or only partially visible. Objects may also overlap one another or leave and re-enter the frame. These situations are major pain points for tracking models, so occlusion handling and re-identification algorithms are crucial to tracking objects successfully.

Occlusion handling techniques include using multiple cameras, leveraging depth information, and employing robust feature descriptors. Re-identification algorithms match objects that exit and re-enter the frame using appearance features, deep learning models, and metric learning techniques.
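Re-identification often reduces to comparing appearance embeddings. The sketch below matches a new detection's feature vector against a gallery of stored identities using cosine distance, the metric DeepSORT uses for its appearance descriptors. The embeddings are assumed to come from some re-ID network, and the `max_distance` threshold is a hypothetical value.

```python
import numpy as np

def reidentify(query, gallery, max_distance=0.3):
    """Match an appearance embedding to previously seen identities.

    query   : 1-D feature vector for a newly detected object
    gallery : dict mapping track ID -> stored feature vector
    Returns the ID with the smallest cosine distance if it is below
    max_distance (illustrative), else None, meaning a new identity.
    """
    q = np.asarray(query, dtype=float)
    q = q / np.linalg.norm(q)
    best_id, best_dist = None, max_distance
    for track_id, feat in gallery.items():
        f = np.asarray(feat, dtype=float)
        dist = 1.0 - float(q @ (f / np.linalg.norm(f)))  # cosine distance
        if dist < best_dist:
            best_id, best_dist = track_id, dist
    return best_id
```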

6. Post-Processing

After tracking, the results can be refined with post-processing techniques such as:

- Trajectory Smoothing: This removes jitter and noise from the tracked paths.

- Behavior Analysis: This analyzes objects' movement patterns and behavior, which helps predict their next moves.
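Trajectory smoothing can be as simple as a centered moving average over each track's positions. This sketch assumes one (x, y) point per frame and an illustrative five-frame window; Kalman smoothers and Savitzky-Golay filters are common alternatives.

```python
import numpy as np

def smooth_trajectory(points, window=5):
    """Centered moving-average smoothing of a tracked path.

    points : N x 2 array of (x, y) positions, one per frame
    window : odd number of frames to average (illustrative default)
    Endpoints use a shrunken window so the output keeps the same length.
    """
    pts = np.asarray(points, dtype=float)
    half = window // 2
    out = np.empty_like(pts)
    for i in range(len(pts)):
        lo, hi = max(0, i - half), min(len(pts), i + half + 1)
        out[i] = pts[lo:hi].mean(axis=0)  # average of neighbouring frames
    return out
```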

Challenges of Object Tracking

Object tracking shows great promise for automating manual tasks across sectors such as the military, healthcare, and manufacturing. However, several challenges can reduce a tracking solution's accuracy and effectiveness, and each must be addressed during implementation. The most common ones, and ways to overcome them, are described below.

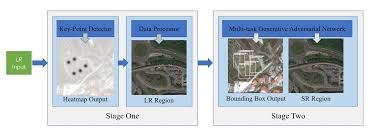

Variation in object sizes: One of the significant challenges in object tracking is dealing with variations in object sizes. Objects can appear larger or smaller depending on their distance from the camera, perspective changes, or zoom levels. This variability can complicate the tracking process, as the tracker must continuously adapt to these changes to maintain accuracy. Traditional algorithms often struggle with this aspect, leading to loss of tracking or drift over time.

To overcome this challenge, modern tracking algorithms incorporate scale-adaptive mechanisms. For example, the CAMShift algorithm adjusts the size of its search window based on the object's size in each frame. Similarly, deep learning-based approaches like SiamRPN (Siamese Region Proposal Network) integrate region proposal networks that dynamically adjust to the object's scale. These methods use multi-scale feature extraction and learn adaptive scaling parameters to keep tracking accurate as object size changes.
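To show the idea behind CAMShift's scale adaptation, the sketch below runs mean-shift iterations on a probability map (for example, a color-histogram back-projection, assumed to be already computed with values in [0, 1]) and then resizes the square search window from the zeroth moment M00, using a 2·sqrt(M00) width rule similar to the one in the original CAMShift description. It is a simplified NumPy-only sketch with a hypothetical function name, not OpenCV's `cv2.CamShift`, which also estimates orientation.

```python
import numpy as np

def camshift_step(prob, center, size, max_iter=10):
    """One CAMShift-style update: mean shift, then window resizing.

    prob   : 2-D probability map with values in [0, 1] (assumed given)
    center : (row, col) of the current search window
    size   : side length of the square search window, in pixels
    Returns ((row, col), new_size).
    """
    h, w = prob.shape
    cy, cx = float(center[0]), float(center[1])
    m00 = 0.0
    for _ in range(max_iter):
        half = size // 2
        r0, r1 = max(0, int(cy) - half), min(h, int(cy) + half + 1)
        c0, c1 = max(0, int(cx) - half), min(w, int(cx) + half + 1)
        win = prob[r0:r1, c0:c1]
        m00 = float(win.sum())           # zeroth moment: total probability mass
        if m00 == 0.0:
            break                        # nothing in the window: object lost
        rows, cols = np.mgrid[r0:r1, c0:c1]
        ny = float((rows * win).sum()) / m00   # centroid (first moments / M00)
        nx = float((cols * win).sum()) / m00
        shift = max(abs(ny - cy), abs(nx - cx))
        cy, cx = ny, nx
        if shift < 0.5:
            break                        # converged
    # Scale adaptation: the window grows or shrinks with the object's mass.
    new_size = max(3, int(round(2.0 * np.sqrt(m00))))
    return (cy, cx), new_size
```

Calling this once per frame lets the window follow the object and track its apparent size.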

Object occlusion: When the tracked object is partially or fully obscured by other objects or entities, the tracking process is interrupted. This is known as occlusion. It is particularly challenging in crowded scenes or dynamic environments where multiple objects interact. During occlusion, the tracker might lose sight of the object, resulting in identity switches or the loss of the object entirely.

To address occlusion, you can use techniques such as motion prediction and re-identification. Kalman and particle filters can predict the object's position during temporary occlusions, allowing the tracker to re-acquire the object once it reappears. Deep learning-based approaches, such as DeepSORT, incorporate appearance models that use visual features to re-identify objects after occlusion. Additionally, incorporating contextual information from surrounding objects and leveraging temporal consistency can enhance the tracker's ability to handle occlusions effectively.
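The sketch below illustrates how a tracker can survive short occlusions by coasting: when no detection is matched, the track advances on its last velocity estimate and counts consecutive misses, and after `max_age` misses it is marked for deletion, similar in spirit to the track-age logic in SORT and DeepSORT. The class name and the finite-difference velocity are hypothetical simplifications standing in for a Kalman filter.

```python
class CoastingTrack:
    """Track that keeps extrapolating through missed detections (simplified)."""

    def __init__(self, x, y, max_age=5):
        self.pos = (float(x), float(y))   # current estimate (measured or coasted)
        self.last = self.pos              # last actually measured position
        self.vel = (0.0, 0.0)             # per-frame velocity estimate
        self.misses = 0                   # consecutive frames without a match
        self.max_age = max_age

    def step(self, detection=None):
        """Advance one frame; detection is an (x, y) tuple, or None if occluded."""
        if detection is None:
            # Occluded: coast on the last velocity estimate.
            self.pos = (self.pos[0] + self.vel[0], self.pos[1] + self.vel[1])
            self.misses += 1
        else:
            # Re-acquired: velocity = displacement since the last real measurement.
            gap = self.misses + 1
            self.vel = ((detection[0] - self.last[0]) / gap,
                        (detection[1] - self.last[1]) / gap)
            self.pos = self.last = (float(detection[0]), float(detection[1]))
            self.misses = 0
        return self.pos

    @property
    def is_dead(self):
        """True once the track has gone unmatched for more than max_age frames."""
        return self.misses > self.max_age
```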

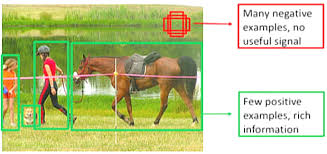

Object Class Imbalance: Object class imbalance occurs when the frequency of different object classes varies significantly in the training data. This imbalance can lead to biased models that perform well on frequent classes but poorly on rare ones. In object tracking, it shows up as some object types being tracked accurately while others are consistently misidentified or lost.

Addressing object class imbalance involves strategies such as data augmentation and re-sampling. Augmenting the dataset with synthetic examples of underrepresented classes can help balance the training data. You can also apply techniques like oversampling the minority class or undersampling the majority class. Additionally, using loss functions that penalize misclassifications of rare classes more heavily, or employing ensemble methods that combine multiple models, can improve performance across all object classes.
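As an illustration of loss reweighting, the sketch computes inverse-frequency class weights and applies them in a weighted cross-entropy, so mistakes on rare classes cost more. The normalization (weights averaging to 1) and the function names are assumptions; focal loss is another common choice.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Per-class weights proportional to 1 / class frequency, normalized to mean 1."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    weights = 1.0 / np.maximum(counts, 1.0)   # guard against empty classes
    return weights * num_classes / weights.sum()

def weighted_cross_entropy(probs, labels, weights):
    """Mean cross-entropy with each sample scaled by its class weight.

    probs  : N x C array of predicted class probabilities (rows sum to 1)
    labels : length-N integer array of true classes
    """
    eps = 1e-12                               # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]
    return float(np.mean(weights[labels] * -np.log(picked + eps)))
```

With nine samples of class 0 and one of class 1, the weights come out as 0.2 and 1.8, so an error on the rare class is penalized nine times more heavily.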

Low Frame Rate: A low frame rate reduces the temporal granularity of the video, making it harder to track fast-moving objects. With fewer frames per second, less information is available to estimate the object's trajectory accurately, leading to gaps in the tracking sequence and a higher chance of losing the object.

Interpolation techniques can generate intermediate frames, providing a higher temporal resolution for tracking. Predictive models, such as those using Kalman filters or recurrent neural networks (RNNs), can estimate the object's position between frames, maintaining continuity in the tracking process. Combining spatial and temporal information from higher-level features can also enhance the robustness of trackers, even in scenarios with low frame rates.
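When frames are sparse, a simple way to fill gaps in a track (as opposed to synthesizing full intermediate frames) is to interpolate the object's bounding box between observations. This sketch assumes roughly constant velocity between observed frames and applies NumPy's `np.interp` to each box coordinate; the function name is hypothetical.

```python
import numpy as np

def interpolate_track(frame_ids, boxes, query_frames):
    """Linearly interpolate bounding boxes for frames with no observation.

    frame_ids    : increasing frame indices where the object was observed
    boxes        : matching N x 4 array of (x1, y1, x2, y2)
    query_frames : frame indices to estimate, e.g. the skipped frames
    Query frames outside the observed range are clamped to the nearest
    endpoint, which is np.interp's default behaviour.
    """
    boxes = np.asarray(boxes, dtype=float)
    # Interpolate each coordinate independently, then reassemble the boxes.
    return np.stack([np.interp(query_frames, frame_ids, boxes[:, k])
                     for k in range(4)], axis=1)
```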

Object tracking in computer vision goes beyond object detection by continuously monitoring and predicting the movement of objects across video frames. Despite challenges such as occlusion, scale variation, class imbalance, and low frame rates, advances in adaptive algorithms and feature extraction techniques continue to make tracking more robust and accurate. The technology can improve efficiency, safety, and operational effectiveness across many domains, which underscores its importance in modern applications.
